Georgia Gkioxari

I am an assistant professor in the Computing & Mathematical Sciences department at Caltech and a Hurt Scholar. I also spend time with the FAIR Perception team at Meta AI. Previously, I was a research scientist at Meta's FAIR team. I completed my PhD at UC Berkeley with Jitendra Malik and my undergraduate studies at NTUA, Greece, where I worked with Petros Maragos.

I am the recipient of the PAMI Young Researcher Award (2021), a Google Faculty Award (2024), the Okawa Research Award (2024) and the Amazon Research Award (2024). My teammates and I received the PAMI Mark Everingham Award (2021) for the Detectron Library Suite. I was named one of 30 influential women advancing AI in 2019 by ReWork and was nominated for the Women in AI Awards in 2020 by VentureBeat. Read more about me and my work in this Q&A.

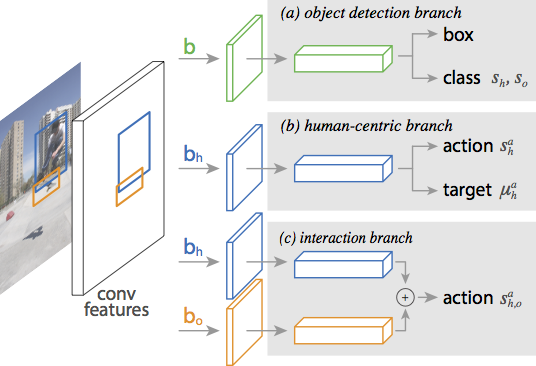

2D Perception

3D Perception

Spatial Reasoning

Ilona Demler

Ziqi Ma

Damiano Marsili

Aadarsh Sahoo

Sabera Talukder

Raphi Kang